OpenAI Codex Review: The Best Code Generator in 2025?

What is Codex?

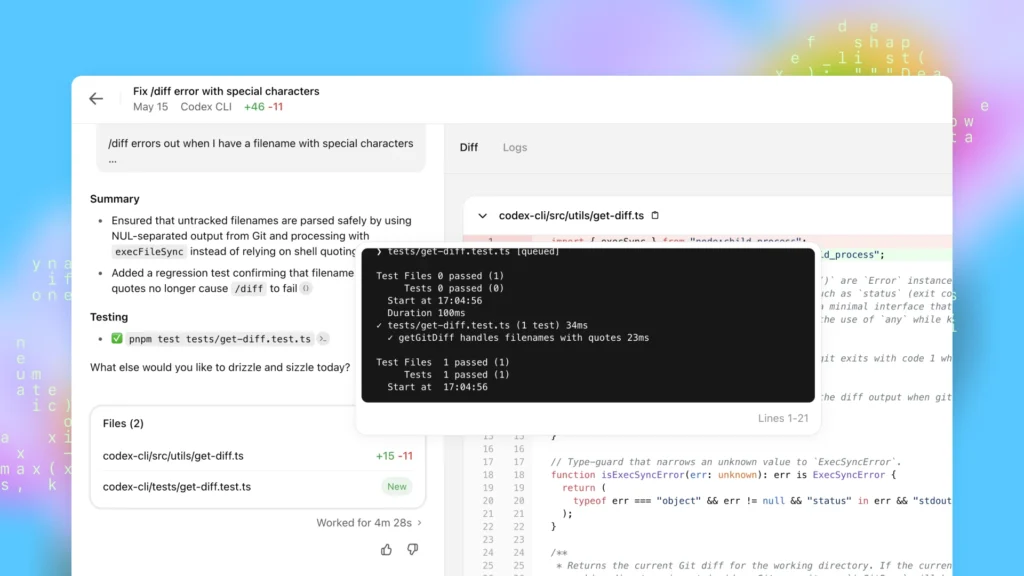

OpenAI Codex is a specialized coding agent built to transform how developers write, review, and maintain software. According to OpenAI, Codex can generate features, fix bugs, run tests, and even propose complete pull requests—all while working from real-world codebases.

Unlike simple autocomplete tools, Codex operates as an “agentic” developer assistant: it understands context, navigates your repository, and optionally runs commands or tests in sandboxed environments.

Here’s a breakdown of OpenAI Codex: where it shines, where it struggles, and whether it’s worth integrating into your workflow.

Strong Points

1. High-quality code generation & refactoring

With the launch of GPT‑5‑Codex (a version of Codex optimized for software engineering tasks), OpenAI reported that it achieved 51.3% accuracy on large refactor tasks versus 33.9% for its predecessor. This suggests it handles multi-file changes, intricate dependencies, and long-running tasks more reliably.
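To put those two figures in perspective, here is a minimal sketch of the arithmetic. The function name `improvement` is made up for illustration; the only inputs are the 51.3% and 33.9% scores quoted above, and nothing here comes from OpenAI's own evaluation code.

```python
def improvement(new_pct: float, old_pct: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = new_pct - old_pct            # difference in percentage points
    relative = absolute / old_pct * 100     # gain relative to the older score
    return round(absolute, 1), round(relative, 1)


# Figures as reported in the paragraph above (hypothetical helper, real inputs).
abs_gain, rel_gain = improvement(51.3, 33.9)
print(f"Absolute gain: {abs_gain} percentage points")
print(f"Relative gain: {rel_gain}% over the predecessor")
```

In other words, the jump is about 17.4 percentage points, or roughly half again as many refactor tasks solved as before. That still leaves close to half of those tasks unsolved, which is worth keeping in mind when reading the headline number.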

2. Review & QA capabilities baked-in

Codex isn’t just about writing code—it can also perform code reviews, run tests, analyze diffs, and validate pull requests. OpenAI says it’s been used internally to catch critical flaws before human review.

3. Deep toolchain & IDE integration

Whether you use the CLI, IDE extensions, or the cloud version, Codex integrates with your workflows: GitHub, VS Code, terminal, Slack. It runs in sandboxed environments to improve security.

4. Supports both interactive and long-form tasks

Codex adjusts its reasoning based on the complexity of the job. For small edits it is fast and lightweight; for major refactors it can “think” longer and take more time.

Limitations & Areas to Watch

1. Pricing & access

Though included in higher-tier ChatGPT plans (Plus, Pro, Business, Enterprise), broader access remains limited and may involve rate limits or credits.

2. Not perfect—still needs human oversight

Despite strong results, Codex can still generate buggy or insecure code. According to external evaluations, it may reproduce memorized code samples or produce suboptimal structures.

3. Mixed user experiences

Community feedback suggests variation in performance—some users praise its output, others report declines in quality or slower performance over time.

4. Legal & ethical caution

Using AI-generated code introduces questions around license compliance, ownership, and bias. Developers should review generated code, especially for production use.

Who Should Use Codex?

Busy devs or teams wanting to streamline recurring tasks like boilerplate generation, tests, or refactoring.

Tech leads and code reviewers looking to augment QA workflows and catch hidden bugs.

Developers experimenting with multi-agent workflows, auto-deploy pipelines, or advanced code automation.

If you’re writing simple one-file scripts or building trivial programs, Codex might feel like overkill, and you may get similar benefits from lighter tools. But for enterprise or team workflows, it shines.

Codex marks a major step forward in AI-powered software engineering. With stronger context understanding, in-depth repository navigation, and code review capabilities, it’s far more than an autocomplete tool—it’s a coding partner.

That said, it’s not flawless. You should still review outputs, monitor pricing, and stay aligned with ethical best practices. Used wisely, however, Codex can significantly boost productivity and help engineering teams ship with more confidence and fewer errors.
